Artificial intelligence has been in development for decades, but in light of ChatGPT it now seems to have outpaced humanity in the manipulation of text, images and video. Naturally, this has led to discussions about its ethical use and its ramifications for society. With surprising ease, anyone can use it to produce content that looks deceptively real.

In this blog entry, we shall therefore focus on the new responsibilities and consider approaches to dealing with this challenge. What I mention here may already have been said or written somewhere else, or you may have thought and written about it yourself. The more the merrier! What won’t be the subject of this discussion is a hypothetical super AI that could theoretically exist in the far future. Without further ado, let us begin.

It should be understood that this blog entry mostly contains thoughts and measures that still need to be refined; it is meant as a contribution to a public discussion that is essential for our future.

It is also important that we don’t lose sight of existential matters such as the climate crisis, but we will focus on that subject another time.

What is Artificial Intelligence?

Before we begin, it is best to define what exactly we are discussing. For the definition, I use the one given by the Oxford English Dictionary:

„[Artificial Intelligence is] the capacity of computers or other machines to exhibit or simulate intelligent behaviour […]“

Practically speaking, you ask ChatGPT a question and it attempts to answer it as if you were speaking to a human. Whether it already exhibits intelligent behaviour or merely simulates it is a different question, and not relevant here.

Its answers, of course, are only as good as the data it was trained on and the information it is given access to while it operates.

Responsibilities and Approaches

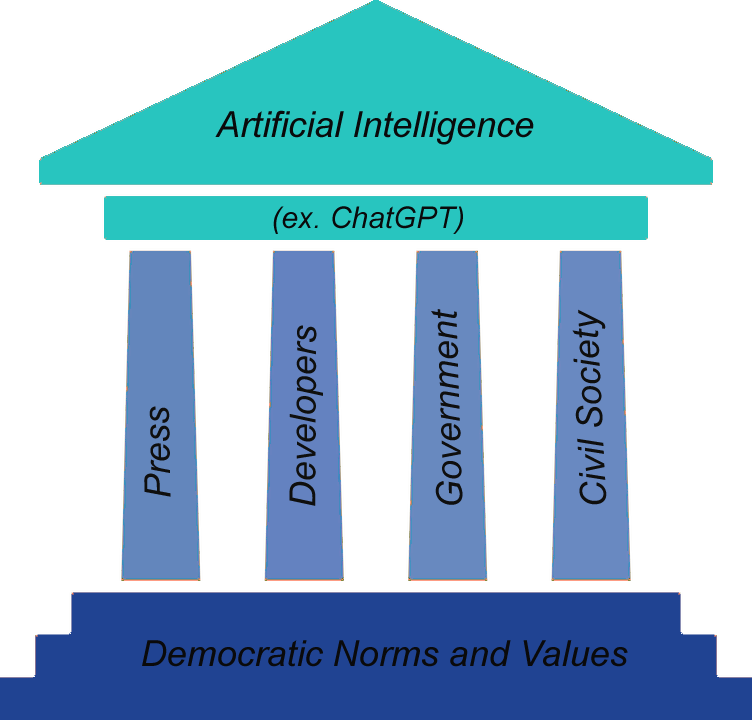

Artificial Intelligence requires a guiding hand, both during its development and in its application. The four categories, visualized as pillars, are broad: the Press (both public and private, including their media presence as a whole, from classic newspapers to social media), the Developers (meaning the companies and programmers), the Government (including political parties as the bridge between the government and the people) and Civil Society (e.g. NGOs, registered associations, individual bloggers, citizens’ initiatives). This model only applies to democracies (or emerging democracies), since only there are open exchange and public discourse possible without fear of repercussions or sanctions on the part of the government.

This foundation allows for productive discussions whose seeds can grow into a bountiful harvest. Naturally, groups who oppose this very foundation (such as right-wing extremists) cannot, and shall not, participate in any way. One must therefore prepare for their disruption strategies and attempts to destroy this foundation, as is already happening (e.g. January 6, 2021 in the USA with groups such as the ‚Proud Boys‘, or the Reichsbürger in Germany). Foreign influence needs to be accounted for as well, from Russian broadcasters like Russia Today to social bots used by China in social networks.

For a definition of these ‚hostile influences‘, see the table at the end.

The Press

It is the responsibility of the press, as it already is (or at least is supposed to be), to clearly state where an image, photo or video came from. For the average person, this must be open to scrutiny, for instance by embedding the link to the picture in an article or listing it at the end under a section called ‚sources‘ or ‚links‘. Through this measure, the publisher maintains its integrity and reputation, and the reader can rest assured that what they see did in fact happen. Moreover, press agencies such as dpa (the German Press Agency/Deutsche Presse-Agentur) should always make clear where and when a photo was taken (this is very likely already part of the job; I mention it only for the sake of completeness).

The Developers

The companies developing AI bear more responsibility than anyone else. They decide which sources and datasets they train the AI on, which information it has access to once it is operating, and what the AI is programmed to do or not to do. Transparency is therefore key, as far as trade secrets allow (conflicts may still arise here).

| What is a Trade Secret? (source: https://www.investopedia.com/terms/t/trade-secret.asp) |
|---|
| Trade secrets are secret practices and processes that give a company a competitive advantage over its competitors (these can take a variety of forms, „such as a proprietary process, instrument, pattern, design, formula, recipe, method, or practice that is not evident to others“). |
| Trade secrets may differ across jurisdictions but have three common traits: not being public, offering some economic benefit, and being actively protected. |
| U.S. trade secrets are protected by the Economic Espionage Act of 1996. |

The creation of an ethics committee within the company is therefore unavoidable. Its task is to document the entire development process, retroactively as well. The ethics committee, in turn, answers to the public (the press, congress/parliament, etc.). Ideally, its reasoning is written down and released as well, either in the form of minutes or as part of the internal documentation.

The Government

The government is responsible for the regulatory framework, including making such ethics committees mandatory. Just like the press, it is also obliged to show in detail when and where a photo was taken. Additionally, it should state who wrote a statement, or at least document this internally in a form that journalists can inspect.

A measure that will likely need a lot of public pressure before the government implements it is a system that holds the government itself accountable. Any attempt, successful or not, to deceive the public through fraudulent images and text documents is to be punished.

For this purpose, an independent organization should be formed that watches over the government and is authorized to interrogate and, if necessary, arrest politicians by lifting their parliamentary immunity; this extends to ministers, heads of state and other high representatives as well. Only a court (most likely the supreme court of a country) may issue an arrest warrant; in the case of a state parliament, it is the respective state court. The penalty is up to the court and depends, of course, on the severity of the fraud (ranging from disciplinary warning letters to prison time).

When it comes to education, tools such as ChatGPT should be made accessible. Once the technology exists, it will be used anyway. If it is not made accessible to everyone, those who are already disadvantaged will fall further behind, while students from wealthier families take full advantage of the new technology.

Lessons could therefore include teaching students how to use it constructively, since the AI does not just repeat information but can also elaborate on it. The teacher, in turn, checks the essay or the AI’s answer. That way, the AI nurtures critical thinking and could even help students develop their arguments.

Civil Society

Society at large is already aware of ChatGPT, and as I’m writing this blog entry, groups that discuss and debate Artificial Intelligence in this context have already formed or are in the process of forming. It is up to citizens to be mindful and ensure that both the press and the government always state where and when photos were taken. It shouldn’t take anyone more than 5 minutes to trace a photo or video back to its source.

One could say it is also an extension of the freedom of information.

When it comes to blogs like this one, local groups, or national and international organisations, we should also recognize our responsibilities. Mistakes may still happen, but there’s no need to panic as long as the apology and revision are honest and sincere. Photos and videos created by an AI should still be labeled as such to avoid confusion, especially when they are part of a parody or another harmless project protected by freedom of speech and artistic liberty.

A prominent recent example of an AI-created image comes from Amnesty International: the organization used a fake image depicting protests and police brutality in Colombia.

Even before Amnesty International published this fake image, existing documentation had already raised awareness of police brutality in Colombia and contributed to the growing acceptance of the need for reform. Hence I completely agree with Juancho Torres, a photojournalist based in Bogotá. He said: “We are living in a highly polarised era full of fake news, which makes people question the credibility of the media. And as we know, artificial intelligence lies. What sort of credibility do you have when you start publishing images created by artificial intelligence?” AI-generated images would also make it easier for authoritarian or corrupt governments to dismiss any evidence brought forward against them.

Under these circumstances, a social contract in the literal sense may be overdue, in which the various registered associations, organizations and so on agree on an ethical and transparent use of AI-generated texts and images. Returning to public discourse, I personally think it is important to discuss the risks and opportunities, the pros and cons, of AI openly. That way, no one is left behind, and our democracies are ideally suited not just to give everyone a voice, including marginalized groups, but also to listen to all of those voices.

If the future is to be a better version of the present, it is one only we can mould together.

| Hostile Influences |
|---|
| To fall into this category, a country, group or individual has to exhibit a persistent destructive tendency. A destructive tendency is not the same as being counterproductive (having an effect that is opposite to the one intended or wanted, as defined by the Cambridge Dictionary). Rather, a destructive tendency is to be understood as behaviour and attitudes that aim to undermine elements such as a scientific consensus (e.g. on climate change) by spreading disinformation (deliberately spreading misinformation), threaten the foundation of democracy (e.g. norms, values, institutions), or attempt to poison public discourse (for instance by normalizing or trivializing antisemitism, xenophobia or similar attitudes; here, too, disinformation plays a major role). |
| Mechanism: For this definition to work, long-term observation is necessary (especially when new groups form or older groups radicalize). For instance, confrontational behaviour on its own should not be seen as a sign of hostile influence. |
| Grey Areas: Grey areas may differ from country to country, most notably with regard to prejudices. Here, it depends on how society at large handles them and whether the discourse has already been poisoned for decades. The process of detoxification can be a lengthy one, spanning decades. Prejudices held by individuals or groups (e.g. a political party) have to be addressed and resolved; this requires good faith (meaning that it must be done in an honest and sincere way). |

Conclusion

Artificial Intelligence confronts us with new challenges and problems, but none that cannot be resolved if the political will exists.